看过这么多 tutorial,跟着 Hadoop 的 tutorial 跑一个 Hello World 是最 suffer 的一次。看过的包括但不限于 《Hadoop 权威指南》,某乎的文章,CSDN 上的经验,最后再加上官网的文档才勉强安装好跑出 WordCound 的结果……心累。

Prerequisites

GNU/Linux。这里用的是 WSL Ubuntu-20.04

Java。 这里用的是 openjdk version “11.0.13”

ssh。

Hadoop 的安装包。可以在 Apache Download Mirrors 下载,这里用的是 hadoop-3.3.2.tar.gz

JDK Enviorment Setting 最好先设置一下 Java 环境:

1 2 3 4 export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64export JRE_HOME=${JAVA_HOME} /jreexport CLASSPATH=.:${JAVA_HOME} /lib:${JRE_HOME} /libexport PATH=${JAVA_HOME} /bin:$PATH

看下效果:

输出:

1 2 3 openjdk version "11.0.13" 2021-10-19 OpenJDK Runtime Environment (build 11.0.13+8-Ubuntu-0ubuntu1.20.04) OpenJDK 64-Bit Server VM (build 11.0.13+8-Ubuntu-0ubuntu1.20.04, mixed mode, sharing)

Hadoop Installation 把 hadoop-3.3.2.tar.gz 解压到某个位置,并改名:

1 2 sudo tar -zxvf hadoop*.tar.gz -C ~/apps cd ~/apps

添加环境变量:

把 Hadoop 环境添加到最后并保存:

1 2 3 4 5 6 export HADOOP_HOME=~/apps/hadoop-3.3.2export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME /lib/nativeexport PATH=$PATH :$HADOOP_HOME /bin:$HADOOP_HOME /sbinexport JAVA_LIBRAY_PATH=/usr/local /hadoop/lib/nativeexport HADOOP_CLASSPATH=${JAVA_HOME} /lib/tools.jar

现在可以检验下 Hadoop 环境是不是设好了:

1 2 3 4 5 6 Hadoop 3.3.1 Source code repository https://github.com/apache/hadoop.git -r a3b9c37a397ad4188041dd80621bdeefc46885f2 Compiled by ubuntu on 2021-06-15T05:13Z Compiled with protoc 3.7.1 From source with checksum 88a4ddb2299aca054416d6b7f81ca55 This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.3.1.jar

Hadoop Configuration Hadoop 有三种运行模式: 独立(或本地)模式(Local (Standalone) Mode),伪分布模式(Pseudo-Distributed Mode)以及全分布模式(Fully-Distributed Mode)。 为了演示以及学习的目的,将 Hadoop 设置成伪分布模式。

定位到 /usr/local/hadoop/etc/hadoop 并修改 hadoop-env.sh:

1 2 export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME} /lib/native"

先建好 $HADOOP_HOME/tmp/data, $HADOOP_HOME/tmp/name 和 $HADOOP_HOME/logs 然后赋权 sudo chown <username>:<group> $HADOOP_HOME/tmp, sudo chown <username>:<group> $HADOOP_HOME/logs,再修改 core-site.xml:

1 2 3 4 5 6 7 8 9 10 11 <configuration > <property > <name > hadoop.tmp.dir</name > <value > file:/home/username/apps/hadoop-3.3.2/tmp</value > <description > Abase for other temporary directories.</description > </property > <property > <name > fs.defaultFS</name > <value > hdfs://localhost:9000</value > </property > </configuration >

修改 hdfs-site.xml:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 <configuration > <property > <name > dfs.replication</name > <value > 1</value > </property > <property > <name > dfs.namenode.name.dir</name > <value > file:/home/username/apps/hadoop-3.3.2/tmp/name</value > </property > <property > <name > dfs.datanode.data.dir</name > <value > file:/home/username/apps/hadoop-3.3.2/tmp/data</value > </property > </configuration >

我们将 dfs.replication 设置为 1 ,这样 HDFS 就不会按照默认配置将文件系统块副本设置为 3。

1 2 3 4 5 6 7 8 9 10 <configuration > <property > <name > mapreduce.framework.name</name > <value > yarn</value > </property > <property > <name > mapreduce.application.classpath</name > <value > $HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value > </property > </configuration >

然后修改 yarn-site.xml:

1 2 3 4 5 6 7 8 9 10 <configuration > <property > <name > yarn.nodemanager.aux-services</name > <value > mapreduce_shuffle</value > </property > <property > <name > yarn.nodemanager.env-whitelist</name > <value > JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME,PATH,LANG,TZ,HADOOP_MAPRED_HOME</value > </property > </configuration >

到此 Hadoop 配置完成。测试以下,首先开启 ssh:

第一次启动的话要将 namenode 格式化:

然后启动 Hadoop 集群:

1 2 3 4 5 Starting namenodes on [localhost] Starting datanodes Starting secondary namenodes [pos.baidu.com] Starting resourcemanager Starting nodemanagers

看一下当前所有运行的 Java 进程:

1 2 3 4 5 6 7 11379 Jps 14388 ResourceManager 14173 SecondaryNameNode 13742 NameNode 13902 DataNode 14542 NodeManager 14943 Jps

如归 NameNode 和 DataNode 未正常启动的话可以参考这里 .

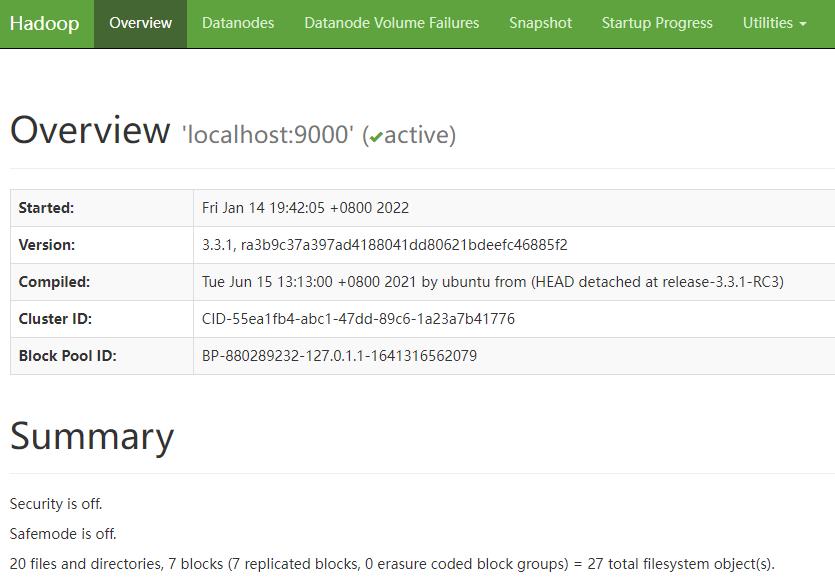

现在可以查看 Hadoop 的 web 界面了: http://localhost:9870

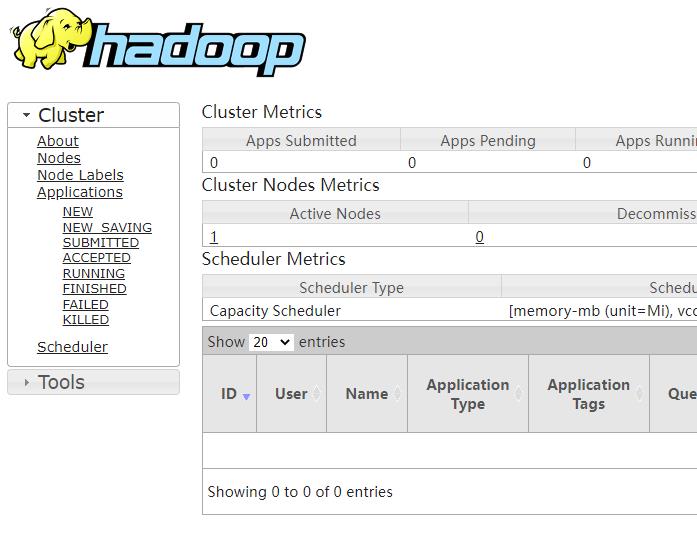

查看资源管理页面: http://localhost:8088/

WordCount 终于可以用 Hadoop 来干活了,比如写一个 WordCount。

首先准备下数据。假如:

/wordcount/input 是 HDFS 里的输入目录

/wordcount/output 是 HDFS 里的输出目录

在 HDFS 里创建 input 路径。这里先不要建 output 路径,否则后面执行 MR 任务时会因为路径存在而报错:

1 hadoop fs -mkdir -p /wordcount/input

我们将准备两个文件作为输入文件:

1 2 ## file01 Hello World Bye World

1 2 ## file02 Hello Hadoop Goodbye Hadoop

将这两个文件在 ~/wordcount/ 路径下,并导入到 HDFS:

1 hadoop fs -copyFromLocal ./file0* /wordcount/input

现在可以 Mapper 和 Reducer 的代码了。这里直接抄官方最新版:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 import java.io.IOException;import java.util.StringTokenizer;import org.apache.hadoop.conf.Configuration;import org.apache.hadoop.fs.Path;import org.apache.hadoop.io.IntWritable;import org.apache.hadoop.io.Text;import org.apache.hadoop.mapreduce.Job;import org.apache.hadoop.mapreduce.Mapper;import org.apache.hadoop.mapreduce.Reducer;import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;public class WordCount public static class TokenizerMapper extends Mapper <Object , Text , Text , IntWritable > { private final static IntWritable one = new IntWritable(1 ); private Text word = new Text(); public void map (Object key, Text value, Context context ) throws IOException, InterruptedException StringTokenizer itr = new StringTokenizer(value.toString()); while (itr.hasMoreTokens()) { word.set(itr.nextToken()); context.write(word, one); } } } public static class IntSumReducer extends Reducer <Text ,IntWritable ,Text ,IntWritable > { private IntWritable result = new IntWritable(); public void reduce (Text key, Iterable<IntWritable> values, Context context ) throws IOException, InterruptedException int sum = 0 ; for (IntWritable val : values) { sum += val.get(); } result.set(sum); context.write(key, result); } } public static void main (String[] args) throws Exception Configuration conf = new Configuration(); Job job = Job.getInstance(conf, "word count" ); job.setJarByClass(WordCount.class ) ; job.setMapperClass(TokenizerMapper.class ) ; job.setCombinerClass(IntSumReducer.class ) ; job.setReducerClass(IntSumReducer.class ) ; job.setOutputKeyClass(Text.class ) ; job.setOutputValueClass(IntWritable.class ) ; FileInputFormat.addInputPath(job, new Path(args[0 ])); FileOutputFormat.setOutputPath(job, new Path(args[1 ])); System.exit(job.waitForCompletion(true ) ? 0 : 1 ); } }

保存为 ~/java_file/wordcount/WordCount.java, 接下来编译这个 java 文件并创建一个 jar:

1 2 3 cd ~/java_file/wordcount/hadoop com.sun.tools.javac.Main WordCount.java jar cf wc.jar WordCount*.class

然后我们运行这段代码:

1 hadoop jar wc.jar WordCount /wordcount/input /wordcount/output

如果成功运行完成,run 出来的结果应该在 /wordcount/output 里面,我们来查看下:

1 hadoop fs -cat /wordcount/output/part-r-00000

1 2 3 4 5 Bye 1 Goodbye 1 Hadoop 2 Hello 2 World 2